Have you been looking for ways to get the right camera shots for your dream game? The Unity engine provides a wide range of camera options and perspectives. Designing the right scene requires careful engineering of camera angles while considering different perspectives. The engineering involved might look like a deterrent, but one way to handle it is to hire game app developers who have solid experience building Unity games.

Logic Simplified, supported by its team of diligent, hard-working developers and animators, has perfected the art of creating true-to-life games. Our Unity game developers have spent countless hours developing the right camera shots for various games. When you partner with us, you know you’re partnering with the experts.

What are cameras in Unity shooter games?

While building Unity shooter games, a Unity game development company creates scenes by arranging and moving objects in three-dimensional space. However, a three-dimensional scene cannot be shown as it is on a computer screen, which is two-dimensional. A view of the scene therefore has to be captured and flattened. “Cameras,” in the context of Unity games, accomplish this. You can hire shooter game developers to create a new shooting game for millions of fans out there.

In the Unity platform, a camera is an object that describes a view in a game scene. The placement of this object determines the point of view. Using the “Camera component,” the size and shape of the region that falls within the view can be decided. According to these set parameters, the camera “captures” a scene and displays it on the screen. When the camera object moves and rotates, the view on the screen also moves and rotates accordingly.

Although Unity supports several camera views, a number of factors need to be considered when using these cameras. These factors depend on the game, the gameplay, and the particular scene or gesture. Some of the factors that play an important role in camera selection and performance are as follows.

Lighting

Lighting effects in Unity work by simulating the behaviour of light in the real world. For more realistic results, detailed models of light behaviour are used, while for stylized results, simplified models are used.

Direct and indirect lighting

Unity supports both direct and indirect lighting. Direct light is light that is emitted from a source, hits a surface a single time, and is then reflected directly into a sensor (for example, the camera in the gaming world, or the eye in the real world). Indirect light is light that, after being emitted from a source, reflects off multiple objects and eventually reaches a sensor. This includes light that hits surfaces several times, as well as skylight. Since Unity supports both, realistic lighting results can be achieved.

Real-time and baked lighting

Sometimes, lighting parameters need to be calculated at runtime. This is called real-time lighting. Unity enables adjusting lighting at runtime, which makes for a great development and playing experience.

Baked lighting is lighting that Unity calculates in advance. The results of these calculations are saved as lighting data, which is then applied at runtime.

Unity allows using real-time lighting, baked lighting, or a combination of the two (called mixed lighting), depending on the gameplay requirements.
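The difference between the two approaches can be illustrated with a small sketch. Unity scripts are written in C#, so the plain Python below is not Unity code; it only assumes a simple diffuse (Lambert) lighting model, and the names `lambert`, `bake`, `realtime`, and `STATIC_NORMALS` are hypothetical, chosen for illustration.

```python
def lambert(normal, light_dir):
    """Diffuse intensity: dot product of unit normal and unit light direction, clamped at 0."""
    dot = sum(n * l for n, l in zip(normal, light_dir))
    return max(0.0, dot)

# Hypothetical static scene: surfaces whose normals never change.
STATIC_NORMALS = {"floor": (0.0, 1.0, 0.0), "wall": (1.0, 0.0, 0.0)}

def bake(light_dir):
    """Baked lighting: compute once in advance and store the results."""
    return {name: lambert(n, light_dir) for name, n in STATIC_NORMALS.items()}

def realtime(name, light_dir):
    """Real-time lighting: recompute on demand, so a moved light takes effect."""
    return lambert(STATIC_NORMALS[name], light_dir)

# Direction from the surface toward the light (straight up).
sun = (0.0, 1.0, 0.0)
lightmap = bake(sun)          # stored lighting data, looked up at runtime

# If the light later moves, the baked data stays as it was,
# while the real-time path reflects the new direction.
moved_sun = (1.0, 0.0, 0.0)
wall_baked = lightmap["wall"]
wall_live = realtime("wall", moved_sun)
```

The baked path trades flexibility for cheap lookups at runtime; the real-time path pays the calculation cost every time but follows changes in the light.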

Global Illumination

Global illumination is a combination of techniques that use both direct and indirect lighting to provide realistic lighting results. Unity provides two global illumination systems, both of which combine direct and indirect lighting: the Baked Global Illumination system and the Realtime Global Illumination system.

The Baked Global Illumination system comprises lightmapping, Light Probes, and Reflection Probes. The Realtime Global Illumination system comprises Realtime Global Illumination using Enlighten, and provides additional functionality to Light Probes.

Projections: perspective and orthographic

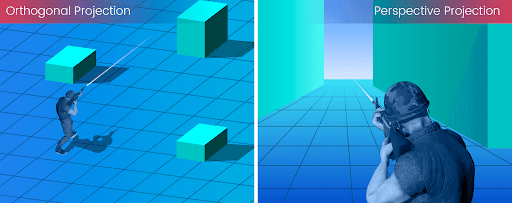

Two very popular camera projections used in games are perspective and orthographic views.

Perspective Projection

In the real world, objects look smaller the farther they are from the viewer. This is known as the perspective effect, and it gives a scene a “sense of depth.” The effect is observed not only with cameras but with the human eye too. It is used extensively in computer graphics to make scenes as realistic as possible. Unity supports perspective camera rendering for the games and graphical videos you might create on its platform.
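The perspective effect comes down to dividing by distance. The sketch below is plain Python rather than Unity C#, and it assumes an idealized pinhole camera; `apparent_height` and its `focal_length` parameter are hypothetical names used only to show the arithmetic.

```python
def apparent_height(height, distance, focal_length=1.0):
    """Projected height of an object under an idealized pinhole camera.

    The projected size is proportional to the real size divided by the
    distance from the camera, which is why distant objects look smaller.
    """
    if distance <= 0:
        raise ValueError("distance must be positive (object in front of the camera)")
    return focal_length * height / distance

# The same 2-unit-tall object at two distances: doubling the distance
# halves its apparent height.
near_size = apparent_height(2.0, 4.0)
far_size = apparent_height(2.0, 8.0)
```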

Field of View

The camera's viewing angle, or field of view, becomes significant when you choose the perspective view. It is measured in degrees and determines how much of the scene is included in the shot. The angle matters here because this is a perspective view rather than an orthographic one: a wider angle covers more of the scene.
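How much of the scene a given angle covers can be worked out with basic trigonometry. The sketch below is plain Python, not Unity C#, and assumes the angle is a vertical field of view in degrees (which is how Unity's `Camera.fieldOfView` property is defined); the function names are hypothetical.

```python
import math

def visible_height(fov_degrees, distance):
    """Height of the region visible at a given distance in front of the camera,
    for a vertical field of view given in degrees."""
    return 2.0 * distance * math.tan(math.radians(fov_degrees) / 2.0)

def visible_width(fov_degrees, distance, aspect):
    """Width of the visible region; aspect is width divided by height."""
    return visible_height(fov_degrees, distance) * aspect

# At 10 units from the camera, a wider angle covers more of the scene.
narrow = visible_height(60.0, 10.0)
wide = visible_height(90.0, 10.0)
```

Because the visible height grows in proportion to distance, the same angle covers a small area close to the camera and a large one far away.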

Orthographic Projection

On the other hand, sometimes a perspective effect is not required. For example, you might need to display information or a map, where objects should not shrink with distance as they would in a real-world camera view. This is known as orthographic projection (also called orthogonal projection). Unity provides an option for this too.

Thus, with Unity you can use both perspective and orthographic camera renderings when developing your shooter game, as the storyline of the game dictates.

Size

Because an orthographic camera does not scale objects with distance, the size of the visible region has to be set by the developer. In orthographic projection, it is this size, and not the camera angle, that determines how much of a region can be viewed.
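As a rough illustration, the visible region of an orthographic camera can be computed from the size value alone. The sketch below is plain Python, not Unity C#, and assumes the convention used by Unity's `Camera.orthographicSize`, where the value is half the vertical extent of the view; `ortho_view_size` is a hypothetical helper name.

```python
def ortho_view_size(size, aspect):
    """Width and height of the visible region of an orthographic camera.

    size   -- half the vertical extent of the view, in world units
    aspect -- viewport width divided by viewport height
    """
    height = 2.0 * size
    width = height * aspect
    return width, height

# A size of 5 on a 16:9 viewport shows a region 10 units tall,
# no matter how far the objects are from the camera.
width, height = ortho_view_size(5.0, 16.0 / 9.0)
```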

Clipping planes

Clipping planes are two imaginary planes perpendicular to the camera’s forward direction: the near clipping plane and the far clipping plane. The regions outside these planes are not “viewed,” hence the word clipping. The near clipping plane is close to the camera; anything between it and the camera is too close for the camera to “see”. Similarly, the far clipping plane is the farthest from the camera and defines the limit beyond which the camera cannot “see”.

The visible region

In both modes, perspective and orthographic, the distance to which cameras can “see” from their current position is limited. Two planes, the far clipping plane and the near clipping plane, determine the viewable region. These planes are perpendicular to the camera’s forward direction. They are called clipping planes because regions beyond these planes are excluded from rendering. The viewable range is the region lying between the two planes.
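The range test described above can be sketched in a few lines. This is plain Python, not Unity C#; the function names are hypothetical, and the near and far values are illustrative numbers rather than required settings. The check covers only depth along the forward direction, not whether a point also falls within the sides of the view.

```python
def depth_along_forward(camera_pos, forward, point):
    """Distance of a point from the camera, measured along the camera's
    forward direction (forward is assumed to be a unit vector)."""
    offset = [p - c for p, c in zip(point, camera_pos)]
    return sum(o * f for o, f in zip(offset, forward))

def within_clip_range(depth, near, far):
    """True if the depth lies between the near and far clipping planes."""
    return near <= depth <= far

camera_pos = (0.0, 0.0, 0.0)
forward = (0.0, 0.0, 1.0)      # camera looks along +z
near, far = 0.3, 1000.0        # illustrative clipping distances

points = {
    "target": (0.0, 0.0, 5.0),       # inside the range
    "too_close": (0.0, 0.0, 0.1),    # in front of the near plane
    "too_far": (0.0, 0.0, 2000.0),   # beyond the far plane
    "behind": (0.0, 0.0, -5.0),      # behind the camera
}
rendered = {
    name: within_clip_range(depth_along_forward(camera_pos, forward, p), near, far)
    for name, p in points.items()
}
```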

Unity Development with Logic Simplified

We at Logic Simplified understand the nuances of developing games on the Unity engine. The great news is, we enjoy the complexity! Our dedicated game designers and developers are experts at understanding the requirements of your game idea and turning it into reality. Whether you're looking to develop a first- or a third-person shooter game, our Unity game development services cover them all! Partner with us to see how we turn your dream game into reality!

Get a Quote
