Introduction

Most businesses today rely on data to make critical decisions. But is having piles of data at your disposal enough on its own? Naaah! The secret sauce is how you process and analyze that data to get structured, meaningful information you can actually act on. Even revolutionary technologies such as Artificial Intelligence (AI) and Machine Learning (ML) are like flogging a dead horse for smart decision-making and business growth if the right data processing techniques are not applied. Today’s businesses are learning this, though slowly. In this post, I attempt to help you understand why data processing matters for ML and AI algorithms: it is what lets them analyze data correctly and furnish you with information you can comprehend and use to bring that proverbial Midas touch to the way you do business.

When done right, data processing teaches ML and AI algos to work as intended

After you extract data, which can be structured, semi-structured, or unstructured, you transform it into a usable form so that ML algorithms can understand it. But what’s more critical here is relevance. If the data itself is not relevant, you can’t expect your ML algorithms to learn what would eventually make them smart and bring value to your business.

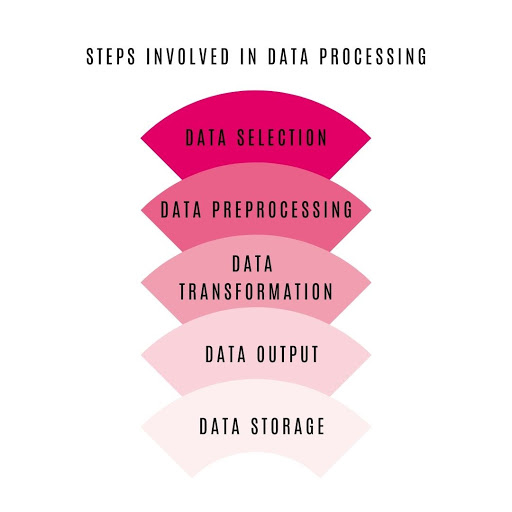

Phases of Data Processing

The graphic above simplifies data processing for machine learning algorithms into sequential steps, elaborated below. The sole purpose of the procedure is to produce actionable insights.

1. DATA SELECTION

This step involves collecting data from trustworthy sources and then selecting the highest-quality subset. Remember that less is more here, because the focus has to be on quality, not quantity. The other parameter to take into consideration is the objective of the task.

2. DATA PREPROCESSING

Preprocessing here means getting the data into a format that the algorithm will understand and accept. It involves:

- Formatting - Data can arrive in different formats, such as a proprietary file format or a Parquet file, to name a few. Formatting converts it into a form that learning models can work with effectively.

- Cleaning - At this step, you remove unwanted data and deal with missing data, in this case by removing the affected instances as well.

- Sampling - This step is essential to save time and memory. Instead of working with the whole dataset, you can use a smaller sample that is faster for exploring and prototyping solutions.
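The three preprocessing steps above can be sketched in a few lines of plain Python. This is only a minimal illustration: the records, the field names `student` and `marks`, and the helper functions are all made up for the example. Cleaning runs before formatting here simply because an empty value cannot be converted to a number.

```python
import random

# Hypothetical raw records: every value arrives as text, and some are missing.
raw_records = [
    {"student": "A", "marks": "12"},
    {"student": "B", "marks": ""},      # missing value
    {"student": "C", "marks": "7"},
    {"student": "D", "marks": None},    # missing value
    {"student": "E", "marks": "9"},
    {"student": "F", "marks": "13"},
]


def clean(records):
    """Cleaning: drop records whose 'marks' field is missing or empty."""
    return [r for r in records if r["marks"] not in (None, "")]


def format_records(records):
    """Formatting: convert 'marks' from text to a number."""
    return [{"student": r["student"], "marks": float(r["marks"])} for r in records]


def sample(records, k, seed=0):
    """Sampling: pick k records at random; the seed makes the draw repeatable."""
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))


cleaned = clean(raw_records)
formatted = format_records(cleaned)
subset = sample(formatted, k=2)

print(len(raw_records), len(formatted), len(subset))
```

On a real project you would more likely reach for a data library than hand-written helpers, but the order of operations stays the same.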

3. DATA TRANSFORMATION

The specific algorithm you are working with and the solution you are looking for influence how the preprocessed data is transformed. After you load the dataset into your library, the next step is the transformation process. A few of the many transformations are described below.

Scaling: Scaling transforms the values of numeric variables so that they fit within a specific range, such as 0 to 1 or 0 to 100. This ensures the variables have comparable ranges, with none out of proportion to the rest, which makes the outcome more meaningful.
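One common way to do this is min-max scaling, sketched below. The function name `min_max_scale` and its parameters are assumptions made for this example, and other scaling methods exist; the sample values are simply a list of numbers to rescale.

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values so the smallest maps to new_min and the largest to new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to rescale.
        return [new_min for _ in values]
    span = hi - lo
    return [new_min + (v - lo) * (new_max - new_min) / span for v in values]


values = [1, 3, 5, 6, 8, 9, 12, 7, 13, 100]

unit_scale = min_max_scale(values)             # fits the 0 to 1 range
percent_scale = min_max_scale(values, 0, 100)  # fits the 0 to 100 range

print(min(unit_scale), max(unit_scale))
print(min(percent_scale), max(percent_scale))
```

Note that a single very large value (100 here) squeezes all the other values toward the low end of the range, which is one reason cleaning comes before transformation.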

Decomposition: This process uses a decomposition algorithm to transform a heterogeneous model into a triple data model. The transformation rules here will categorize the data set into structured data, semi-structured data, and unstructured data. Subsequently, we can pick the category that suits our model's ML algorithm.

Data Aggregation Process (DAP): The raw dataset is passed through an aggregator that locates, extracts, transports, and normalizes it. The data may go through several rounds of aggregation, and the aggregated result can either be stored or passed on to further operations. This process directly impacts the quality of the software system.
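As a rough illustration of aggregation, and of one aggregation feeding another, the sketch below groups some made-up readings by region and then rolls the per-region totals up into an overall total. The data, the `aggregate` helper, and the choice of count, total, and mean as summaries are all assumptions for the example, not a description of any particular aggregator.

```python
from collections import defaultdict

# Hypothetical raw rows: (region, value)
readings = [
    ("north", 10.0),
    ("south", 4.0),
    ("north", 14.0),
    ("south", 6.0),
    ("east", 5.0),
]


def aggregate(rows):
    """Group rows by key and summarize each group."""
    groups = defaultdict(list)
    for key, value in rows:
        groups[key].append(value)
    return {
        key: {"count": len(vals), "total": sum(vals), "mean": sum(vals) / len(vals)}
        for key, vals in groups.items()
    }


# First aggregation: one summary per region.
by_region = aggregate(readings)

# Second aggregation: combine the per-region totals into one figure.
overall_total = sum(summary["total"] for summary in by_region.values())

print(by_region)
print(overall_total)
```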

4. DATA OUTPUT & INTERPRETATION

In this step, meaningful output is produced in whatever form you prefer: a graph, video, report, image, audio, and so on. The process involves the following steps:

- Decoding the data, which was earlier encoded for the ML algorithm, back into an understandable form.

- Communicating the decoded data to various locations where any user can access it at any time.
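To make the decoding step concrete, here is a small sketch that assumes the simplest kind of encoding: text labels replaced by integer codes before training. The grade labels and the two lookup tables are hypothetical; a real pipeline would decode with whatever scheme it used to encode.

```python
# Hypothetical labels, encoded as integers so an algorithm can work with them.
GRADES = ["A", "B", "C", "D", "E"]

encode = {label: code for code, label in enumerate(GRADES)}
decode = {code: label for label, code in encode.items()}

original = ["E", "D", "E", "A"]
encoded = [encode[label] for label in original]   # what the algorithm sees
decoded = [decode[code] for code in encoded]      # what a person reads

print(encoded)
print(decoded)
```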

5. DATA STORAGE

The final step of the entire process is where data or metadata is stored for future use.

Difference between a regular computing program and AI

Let’s take you through a simple example:

Let’s say an AI is given the marks of 10 students in a class (1, 3, 5, 6, 8, 9, 12, 7, 13, 100). Based on that, I ask it a question: "How will you rate the overall class on a scale from A to E (based on slabs like 0-20 is E, 21-40 is D, and so on)?"

The difference between a regular computer program and AI is the same as that between the two men in this saying: "Give a man a fish and he'll eat for a day. Teach a man to fish and he'll eat for a lifetime." The first man is like a regular program, which does not learn on its own and only gives an output when it is provided with input data. AI, on the other hand, is the man you teach "how" once; it then learns and improves on its own, building up the rules and methods for handling certain kinds of data, and gives the desired output. The way it learns is ML (Machine Learning, or Machine Intelligence).

A regular program may simply take the average of the 10 marks and rate the class on that. An AI, however, can identify the outlier (100 in this case) before giving its answer. That shows the world of difference between the two kinds of program, and how Artificial Intelligence gets ahead with the help of Machine Learning.
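The marks example can be worked through in code. To be clear, the sketch below is not machine learning: it uses a fixed statistical rule (the 1.5 × IQR rule) as a stand-in for the kind of outlier a learned model might flag. Only the slabs 0-20 = E and 21-40 = D come from the example; the remaining slabs and the function names are assumptions.

```python
import statistics

marks = [1, 3, 5, 6, 8, 9, 12, 7, 13, 100]


def grade(average):
    """Map an average to a grade. Slabs above 40 are assumed to continue in steps of 20."""
    if average <= 20:
        return "E"
    if average <= 40:
        return "D"
    if average <= 60:
        return "C"
    if average <= 80:
        return "B"
    return "A"


def find_outliers(values):
    """Flag values more than 1.5 * IQR below the first or above the third quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]


# The "regular program": average everything and grade the result.
naive_average = statistics.mean(marks)

# The outlier-aware version: set unusual marks aside first.
outliers = find_outliers(marks)
typical_marks = [m for m in marks if m not in outliers]
robust_average = statistics.mean(typical_marks)

print(naive_average, grade(naive_average))
print(outliers, robust_average, grade(robust_average))
```

With these particular marks both averages happen to fall in slab E, but the single mark of 100 more than doubles the average, from about 7.1 to 16.4. With a slightly stronger class, one such outlier could push the rating up a whole slab.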

GIGO

We train a machine learning model with a particular output in mind, and the data we provide to the algorithm determines whether we get it. If the data provided is inappropriate, the information the model gives us will be worthless. The strict logic computers work on is the correspondence between input and output: the quality of the data provided (input) determines the quality of the information we receive (output). In other words, garbage in, garbage out (GIGO).

Logic Simplified has done much work in Artificial Intelligence and Machine Intelligence and understands the critical role of data processing. We know that AI and ML, as drivers of many other technologies, will impact the future of every industry and of people in many expected and unexpected ways. Let our AI programmers help you make an impact in your world by making your systems smarter: enhanced productivity, higher profits, less time spent, enhanced security throughout the process, prevention of unauthorized access, and so much more.

Get a Quote
